FRC Competition Robot

What is FIRST Robotics?

FIRST Robotics Competition (FRC) is an international high school robotics competition often described as “the ultimate sport for the mind.”

- The Challenge: Teams of students have a limited time (traditionally about 6 weeks) to design, build, and program an industrial-size robot (weighing up to 120 lbs) to play a difficult field game against other teams.

- The Game: The theme changes every year (e.g., shooting balls into goals, stacking cones, or hanging from bars). Matches are played on a basketball-court-sized field between two alliances of three robots each.

- More Than Robots: Each team operates like a small startup and must also raise funds, design a brand, and do community outreach. The program heavily emphasizes “Gracious Professionalism”: competing hard while treating one another with respect and kindness.

It is widely considered the highest level of high school robotics due to the size, cost, and engineering complexity of the machines.

My Role

- I served as the Programming Mentor and helped with robot design as well.

- Collaborated with a team of 12 students under a strict 6-week build deadline.

- Personally designed the vision system and the robot's automated movements.

Technical Implementation

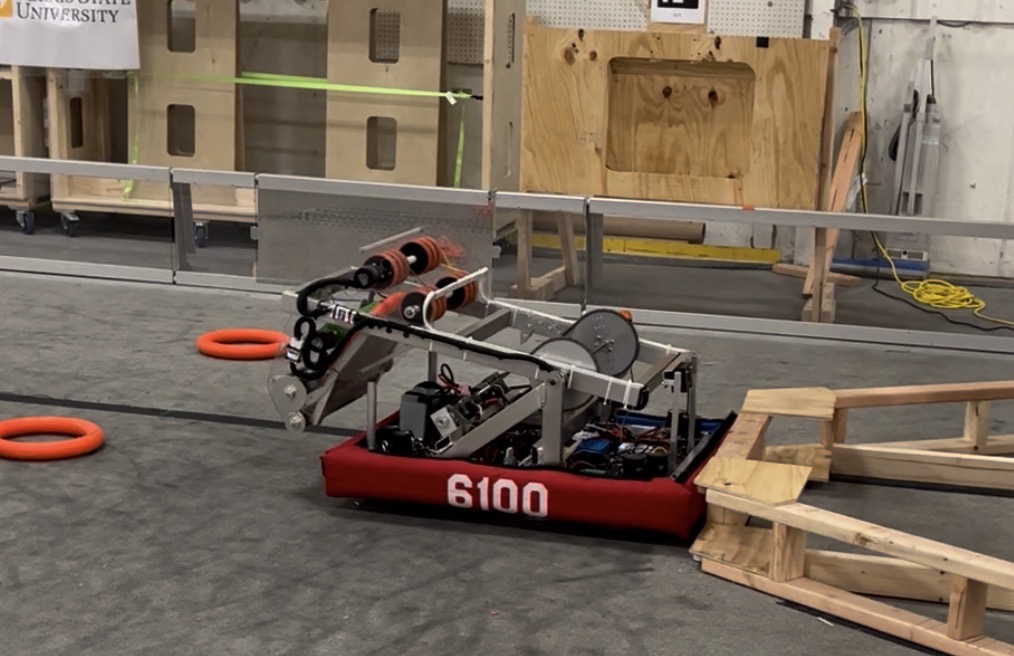

Mechanical Design

We used Fusion 360 to design the robot's parts.

- Drivetrain: Swerve drive, with independently steered wheel modules, for omnidirectional movement.

- Intake Mechanism: Designed an over-the-bumper intake using polycarbonate plates and compliant wheels to handle game pieces at high velocity.

- Shooter: Implemented a shooter with an adjustable angle for variable-range shooting.
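The source does not include drivetrain code. The sketch below illustrates how swerve drive achieves omnidirectional movement through inverse kinematics. Each module's velocity is the desired chassis velocity plus the rotational term ω × r, so every wheel gets its own speed and steering angle. The class and method names are hypothetical. On an FRC robot this math is usually handled by WPILib's SwerveDriveKinematics class, and the source does not say which approach the team used.

```java
/**
 * Hypothetical swerve inverse-kinematics helper (not the team's code).
 * Conventions assumed: x forward, y left, omega counter-clockwise positive, meters and radians.
 */
final class SwerveKinematicsSketch {

    /** Target wheel speed (m/s) and steering angle (rad) for one module. */
    static final class ModuleState {
        final double speed;
        final double angleRad;

        ModuleState(double speed, double angleRad) {
            this.speed = speed;
            this.angleRad = angleRad;
        }
    }

    /**
     * Each module's velocity is the chassis translation plus omega x r, where r = (x, y)
     * is the module's offset from the robot's center of rotation.
     */
    static ModuleState[] toModuleStates(double vx, double vy, double omega,
                                        double[] moduleX, double[] moduleY) {
        ModuleState[] states = new ModuleState[moduleX.length];
        for (int i = 0; i < moduleX.length; i++) {
            // omega x r for r = (x, y, 0) is (-omega * y, omega * x, 0)
            double mvx = vx - omega * moduleY[i];
            double mvy = vy + omega * moduleX[i];
            states[i] = new ModuleState(Math.hypot(mvx, mvy), Math.atan2(mvy, mvx));
        }
        return states;
    }

    /** Scales every module speed down by the same factor if any module exceeds maxSpeed. */
    static ModuleState[] desaturate(ModuleState[] states, double maxSpeed) {
        double fastest = 0.0;
        for (ModuleState s : states) {
            fastest = Math.max(fastest, s.speed);
        }
        if (fastest <= maxSpeed) {
            return states;
        }
        double scale = maxSpeed / fastest;
        ModuleState[] scaled = new ModuleState[states.length];
        for (int i = 0; i < states.length; i++) {
            // Same angle, proportionally reduced speed, so the chassis motion keeps its direction.
            scaled[i] = new ModuleState(states[i].speed * scale, states[i].angleRad);
        }
        return scaled;
    }
}
```

Consider a pure rotation command with the modules at the corners of the robot. Every module then points tangent to a circle around the robot's center. A full implementation would also turn each module by the shortest path, reversing the wheel instead of steering more than 90°.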

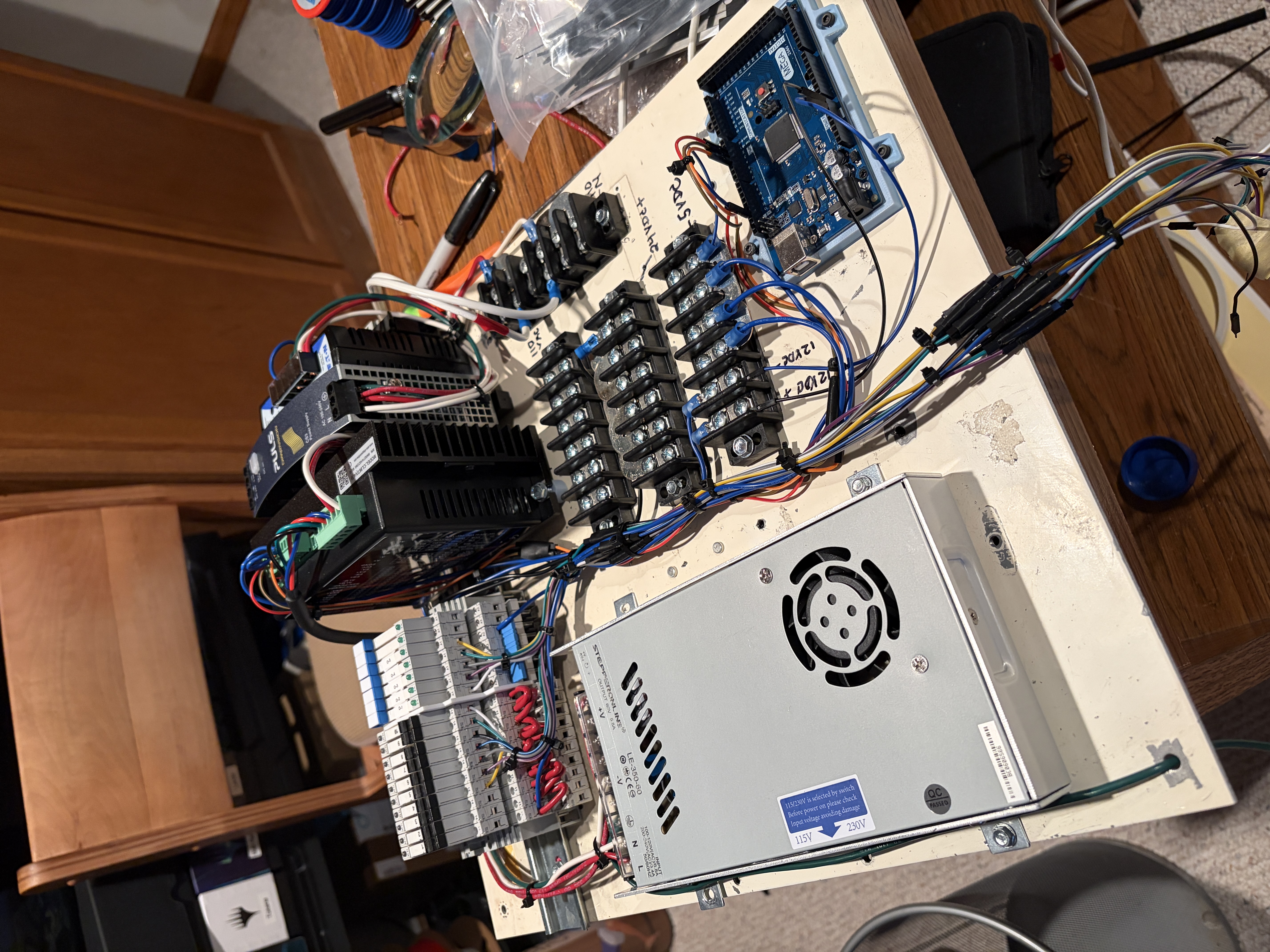

Software & Control

- Language: Programmed in Java.

- Autonomous: Used path-planning algorithms to score preloaded game pieces and taxi out of the starting zone during the 15-second autonomous period.

- PID Control: Tuned PID loops for precise motor velocity control and arm positioning.
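The source gives neither the gains nor the controller implementation. The sketch below is a minimal discrete PID controller of the kind tuned for shooter-wheel velocity and arm position. Its output is kP·e + kI·∫e dt + kD·de/dt, evaluated once per control-loop tick. The class SimplePid is hypothetical and its gains are placeholders. WPILib's PIDController class provides the same behavior, and the source does not say whether the team used it or onboard motor-controller PID.

```java
/**
 * Hypothetical minimal PID controller (not the team's code).
 * Units of setpoint/measurement are whatever the mechanism uses (e.g. RPM or degrees).
 */
final class SimplePid {
    private final double kP;
    private final double kI;
    private final double kD;
    private double integral = 0.0;
    private double prevError = 0.0;
    private boolean hasPrev = false;

    SimplePid(double kP, double kI, double kD) {
        this.kP = kP;
        this.kI = kI;
        this.kD = kD;
    }

    /**
     * @param setpoint  desired value, e.g. target wheel RPM or arm angle
     * @param measured  current sensor reading in the same units
     * @param dtSeconds time since the previous call
     * @return control output (e.g. motor voltage or duty cycle, depending on gain scaling)
     */
    double calculate(double setpoint, double measured, double dtSeconds) {
        double error = setpoint - measured;
        integral += error * dtSeconds;
        // No derivative on the first call, so the output does not spike from a missing history.
        double derivative = hasPrev ? (error - prevError) / dtSeconds : 0.0;
        prevError = error;
        hasPrev = true;
        return kP * error + kI * integral + kD * derivative;
    }

    /** Clears accumulated state, e.g. when the mechanism is re-enabled. */
    void reset() {
        integral = 0.0;
        prevError = 0.0;
        hasPrev = false;
    }
}
```

With WPILib's TimedRobot, the default loop period is 20 ms, so dtSeconds would typically be 0.02. Real mechanisms usually also need integral clamping (anti-windup) and, for flywheels, a feedforward term; this sketch omits both.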

Vision System

Built the vision system on an Orange Pi 5 coprocessor with two cameras.

- Target Acquisition (Camera 1): Used AprilTag fiducials mounted on the goals to calculate the distance and angle to the target in real time. This data set the shooter's wheel speed and the turret angle (or drivetrain rotation), enabling “shoot-on-the-move” capabilities.

- Object Detection (Camera 2): Deployed a machine learning model to detect game pieces on the field. This allowed the robot to automatically align its intake with the nearest game piece, significantly reducing cycle times.

- Hardware Acceleration: Used the NPU in the Orange Pi 5's Rockchip RK3588S to accelerate the machine learning model, achieving high-frame-rate game piece detection.
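The source does not describe the vision software or its output format. The sketch below shows the geometry behind the two camera roles under stated assumptions. First, the AprilTag pipeline is assumed to already report the target's position in the robot frame (x forward, y left), from which distance and bearing follow directly. Second, the object detector is assumed to output pixel bounding boxes, and a pinhole-camera model converts a box center into a yaw angle for aligning the intake. The names Detection, nearest and yawToPixel are hypothetical. Treating the largest box as the nearest game piece is also a heuristic assumption, not the team's confirmed method.

```java
import java.util.List;

/**
 * Hypothetical vision-math helpers (not from the original robot code).
 * Robot-frame convention assumed: x forward, y left, angles counter-clockwise positive.
 */
final class VisionMathSketch {

    /** Planar distance (same units as the inputs) to a target at (xForward, yLeft). */
    static double distanceToTarget(double xForward, double yLeft) {
        return Math.hypot(xForward, yLeft);
    }

    /** Bearing to the target in radians; positive means the target is to the robot's left. */
    static double angleToTarget(double xForward, double yLeft) {
        return Math.atan2(yLeft, xForward);
    }

    /** One object-detector result: bounding-box center x and size, in pixels. */
    static final class Detection {
        final double centerX;
        final double width;
        final double height;

        Detection(double centerX, double width, double height) {
            this.centerX = centerX;
            this.width = width;
            this.height = height;
        }

        double area() {
            return width * height;
        }
    }

    /**
     * Picks the detection with the largest bounding box, assuming the closest
     * game piece appears largest. Returns null when nothing was detected.
     */
    static Detection nearest(List<Detection> detections) {
        Detection best = null;
        for (Detection d : detections) {
            if (best == null || d.area() > best.area()) {
                best = d;
            }
        }
        return best;
    }

    /**
     * Horizontal angle (radians) from the camera's optical axis to a pixel column,
     * using a pinhole-camera model. Positive means right of image center.
     */
    static double yawToPixel(double pixelX, double imageWidth, double horizontalFovRad) {
        double focalPx = (imageWidth / 2.0) / Math.tan(horizontalFovRad / 2.0);
        return Math.atan((pixelX - imageWidth / 2.0) / focalPx);
    }
}
```

yawToPixel is positive to the right, while angleToTarget is counter-clockwise positive. Negate the yaw before passing it to a counter-clockwise-positive heading controller. The sketch also assumes the object-detection camera is centered on the intake; an offset camera needs an extra correction.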

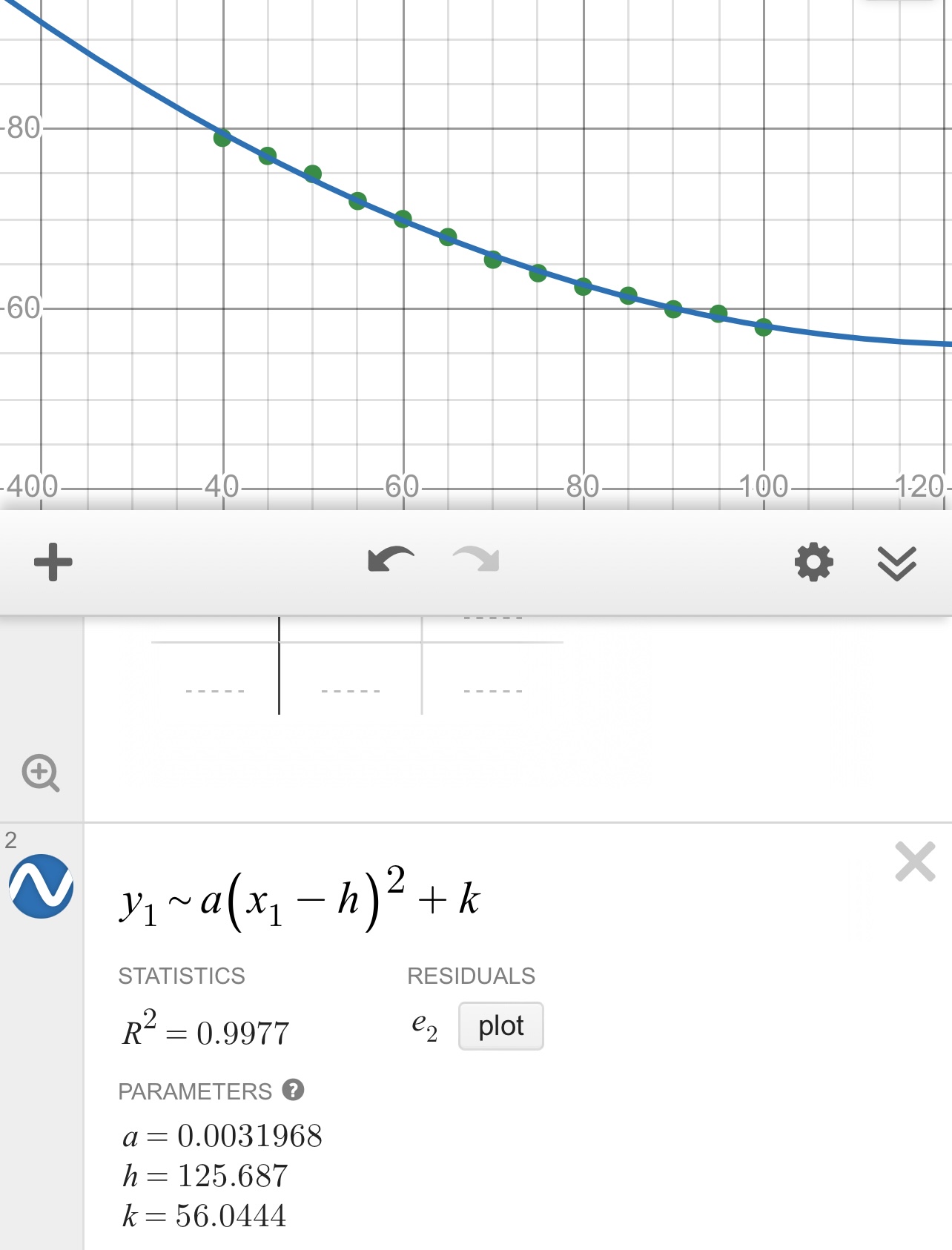

Ballistic Modeling & Control

To enable dynamic shooting from any position on the field, I developed a custom mapping function to control the shooter's pivot arm angle:

- Empirical Data Collection: I conducted field tests to determine the ideal arm angle at several fixed distances (e.g., 5 ft, 10 ft, 15 ft, 20 ft).

- Non-Linear Regression: The data showed a non-linear relationship, so I fit a quadratic function (second-order polynomial regression) that maps the vision system's distance reading to the required arm angle.

- Real-Time Execution: This function ran inside the main control loop, allowing the robot to continuously recalculate its arm angle while moving and eliminating the need for preset shooting zones.
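The quadratic fit described above, θ(d) = a·d² + b·d + c, can be computed by least squares. This means solving the 3×3 normal equations built from sums of powers of the measured distances. The sketch below uses Cramer's rule for that solve. It also separates the offline fit from the cheap evaluation that runs every loop. The class and method names are hypothetical, and the source does not say which tool performed the team's regression.

```java
/** Hypothetical least-squares quadratic fit for distance -> arm angle (not the team's code). */
final class QuadraticFitSketch {

    /**
     * Returns {a, b, c} minimizing the sum of (a*d^2 + b*d + c - angle)^2.
     * Requires at least three samples with distinct distances.
     */
    static double[] fit(double[] distances, double[] angles) {
        if (distances.length != angles.length || distances.length < 3) {
            throw new IllegalArgumentException("need at least 3 paired samples");
        }
        double s0 = 0, s1 = 0, s2 = 0, s3 = 0, s4 = 0, t0 = 0, t1 = 0, t2 = 0;
        for (int i = 0; i < distances.length; i++) {
            double d = distances[i];
            double y = angles[i];
            double d2 = d * d;
            s0 += 1; s1 += d; s2 += d2; s3 += d2 * d; s4 += d2 * d2;
            t0 += y; t1 += d * y; t2 += d2 * y;
        }
        // Normal equations: m * [a, b, c]^T = v
        double[][] m = {{s4, s3, s2}, {s3, s2, s1}, {s2, s1, s0}};
        double[] v = {t2, t1, t0};
        double det = det3(m);
        if (Math.abs(det) <= 1e-12 * Math.abs(s4 * s2 * s0)) {
            throw new IllegalArgumentException("samples do not determine a unique quadratic");
        }
        double[] coeffs = new double[3];
        for (int col = 0; col < 3; col++) {
            // Cramer's rule: replace column `col` with v and divide determinants.
            double[][] mc = new double[3][];
            for (int r = 0; r < 3; r++) {
                mc[r] = m[r].clone();
                mc[r][col] = v[r];
            }
            coeffs[col] = det3(mc) / det;
        }
        return coeffs;
    }

    /** Determinant of a 3x3 matrix by cofactor expansion along the first row. */
    static double det3(double[][] m) {
        return m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
             - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
             + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
    }

    /** Evaluates a*d^2 + b*d + c (Horner form); this is the part run each control-loop tick. */
    static double angleFor(double[] coeffs, double distance) {
        return (coeffs[0] * distance + coeffs[1]) * distance + coeffs[2];
    }
}
```

Only angleFor has to run in the control loop. The coefficients can be fitted once from the field-test data and hard-coded. Fitting points generated from a known quadratic is a quick way to check that fit recovers its coefficients.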

Videos

Autonomous Video

Competition Videos

Our team number is 6100.

- Match 1: our robot is on the Blue Alliance.

- Match 2: our robot is on the Red Alliance.

- Match 3: our robot is on the Blue Alliance.